One of the many issues with LTE Circuit Switched Fall Back (CSFB) is what happens when the UE falls back on the target RAT (can be 3G or 2G, operator defined) and the LAC is not the same as the one the UE is registered on through the Combined Attach procedure in LTE. This typically occurs on the borders of Location Areas (LA) and Tracking Areas (TA) or when the target layer is 3G and the UE can only acquire 2G (e.g. indoors).

In these cases, a Mobile Originated CS call would experience a delay as the UE would first have to perform a LAU procedure on the target RAT followed by the subsequent call setup procedure.

A Mobile Terminated CS call, on the other hand, would fail: the call has already been routed to the "old" MSC (i.e. the one the UE registered on through the Combined Attach procedure), but the UE finds itself in the LAC of a different, "new" MSC.
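The two cases above can be summarised in a small decision function. This is only an illustrative sketch: the names `Direction` and `csfb_outcome` are made up for this post, and it assumes, as the text does, that a different LAC also means a different MSC.

```python
from enum import Enum


class Direction(Enum):
    MO = "mobile originated"
    MT = "mobile terminated"


def csfb_outcome(registered_lac: int, fallback_lac: int, direction: Direction) -> str:
    """Describe what happens to a CS call after fallback (simplified)."""
    if registered_lac == fallback_lac:
        # UE lands in the LA it registered on via Combined Attach.
        return "normal call setup"
    if direction is Direction.MO:
        # UE must update its location before it can originate the call.
        return "LAU first, then call setup (delayed)"
    # MT call was already routed to the MSC serving the registered LAC.
    return "call fails (routed to old MSC)"


print(csfb_outcome(100, 100, Direction.MT))
print(csfb_outcome(100, 200, Direction.MO))
print(csfb_outcome(100, 200, Direction.MT))
```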

To prevent this from happening, two 3GPP-defined procedures can be reused. The first is called Mobile Terminated Roaming Retry (defined in Release 7) and the second is called Mobile Terminated Roaming Forwarding (defined in Release 10). Both require an upgrade to the CN elements involved in the call setup procedure.

It is interesting to note that both of these procedures were originally defined to handle the (rare) case of a UE being paged in one LA while crossing an LA border, and thus performing a LAU procedure on a different MSC from the one it is being paged on.

For this post we will have a closer look at the Mobile Terminated Roaming Retry (MTRR) procedure.

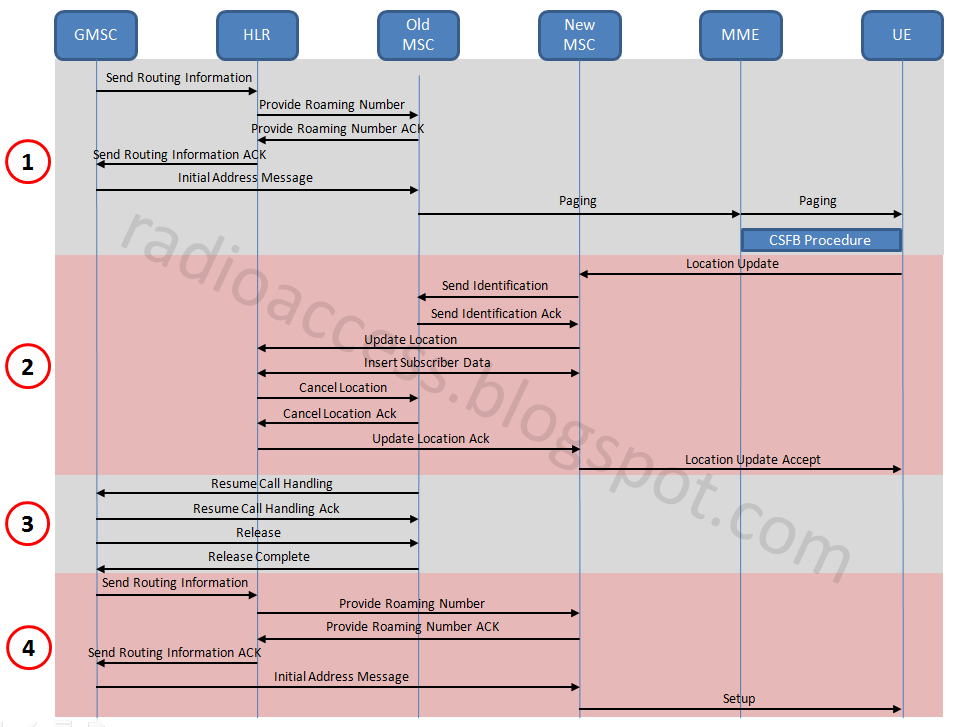

The signalling flow is shown above and can be broken down into four distinct phases.

During phase 1, an MT call arrives at the GMSC, which initiates the MAP procedures to reach the MSC the UE is registered on (through the Combined Attach procedure). That MSC sends a paging request over the SGs interface to the MME, which in turn pages the UE. The paging triggers the CSFB procedure and the UE is directed towards the target RAT.

Under normal circumstances the UE would fall back on the LA it registered on and would send a Paging Response. In this case however the UE falls back on a different LA and thus has to perform a LAU procedure. This is shown in phase 2, as is the subsequent procedure to transfer the UE subscription from the "old" MSC to the "new" one.

This is also where the call setup procedure would fail, as the "old" MSC would have no way of informing the GMSC that the UE has changed MSCs.

With MTRR however the "old" MSC informs the GMSC through the Resume Call Handling procedure that the call setup procedure should be repeated by contacting the HLR once more. This is shown in phase 3 of the signalling flow.

Finally, phase 4 is a repeat of phase 1, but this time the GMSC contacts the correct "new" MSC and the call setup procedure is successful.
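To make the four phases a bit more concrete, here is a toy model of the flow. It is a heavily simplified sketch, not an implementation of the MAP procedures: the `Hlr` class, the `terminate_call` function and the MSC names are all invented for illustration, and paging, the SGs interface and the MME are folded into a single "does the UE show up on this MSC" check.

```python
from dataclasses import dataclass, field


@dataclass
class Hlr:
    # Hypothetical minimal HLR: maps IMSI -> name of the serving MSC.
    serving_msc: dict = field(default_factory=dict)

    def send_routing_info(self, imsi: str) -> str:
        """Tell the GMSC which MSC currently serves the subscriber."""
        return self.serving_msc[imsi]

    def update_location(self, imsi: str, new_msc: str) -> str:
        """Register the new MSC and return the old one (gets Cancel Location)."""
        old_msc = self.serving_msc[imsi]
        self.serving_msc[imsi] = new_msc
        return old_msc


def terminate_call(hlr, imsi, fallback_msc, supports_mtrr, max_attempts=2):
    """Return (success, log) for an MT call where the UE falls back on fallback_msc."""
    log = []
    for _ in range(max_attempts):
        # Phase 1 (and phase 4 on the retry): GMSC asks the HLR where to route.
        target = hlr.send_routing_info(imsi)
        log.append(f"GMSC routes call to {target}")
        if target == fallback_msc:
            log.append(f"{target} reaches UE, call set up")
            return True, log
        # Phase 2: UE fell back under another MSC and performs a LAU there.
        old_msc = hlr.update_location(imsi, fallback_msc)
        log.append(f"UE performs LAU on {fallback_msc}; {old_msc} receives Cancel Location")
        if not supports_mtrr:
            log.append(f"{old_msc} cannot notify GMSC, call fails")
            return False, log
        # Phase 3: old MSC asks the GMSC to query the HLR again.
        log.append(f"{old_msc} sends Resume Call Handling to GMSC")
    return False, log


hlr = Hlr({"imsi-1": "MSC-old"})
ok, steps = terminate_call(hlr, "imsi-1", "MSC-new", supports_mtrr=True)
print(ok)
for step in steps:
    print(step)
```

Running the same scenario with `supports_mtrr=False` stops after the LAU, which mirrors the failure case described earlier.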

It is also important to note that during the LAU procedure in phase 2, the UE populates an IE indicating that a CS MT call is in progress. This ensures the "new" MSC keeps the signalling connection towards the UE open after the LAU, ready for the subsequent signalling exchange in phase 4.

Obviously this whole procedure adds a couple of seconds to the overall call setup time, which due to the general CSFB procedure is already longer than a "native" CS call setup in 2G/3G.

In the next few posts I will look at the second option, Mobile Terminated Roaming Forwarding (MTRF), and also at a completely different approach that uses an interworking function between the MME and MSC in order to avoid any upgrades in the legacy CS network.